Writing functional code in C++ III – Performance of different allocation schemes

Luca Bolognese -Now we know how to represent records and we know how to operate on them using a nice F# like syntax. But how do we store our record in a data structure in the first place?

Code for this post is here. Thanks to Andy Sawyer and Steve Bower for reviewing this.

As it is often the case, C++ gives you many options that are not available in standard functional languages. A mixed blessing of some sort.

- You can store them by value or by pointer

- If you store them by pointer, you can use normal pointers or smart pointers

- If you store them by smart pointer, you can use different ones

Well, storing them by value is the simplest option, but it has two shortcomings:

- You pay the price of a full record copy whenever the object need to be copied (i.e. when you add/remove records to/from a vector and whenever the vector needs to resize itself)

- If you store a record in two containers, you get two different copies of it. If you modify one, the other won’t be modified

The second issue is not a problem in our scenario as records, by definition, are immutable and structurally equivalent (aka equality for them doesn’t mean pointer equality). The first issue is more tricky. Our records get a copy constructor by default which does a ‘memcpy’ of the object. That should be fast. But fast enough?

To answer this question (and more), I embarked in a little performance test that encompasses most ways to store a pod type in an vector like data structure. For all my test I used this struct:

struct Record { int Id; char k1[2]; char k2[2]; char k3[2]; char k4[2]; char k5[2]; char k6[2]; char mem[bigBlock]; void Lock() {} void Unlock() {} };

By recompiling with different values for ‘bigBlock’ I can test what happens when the record size grows. In all tests a record is initialized with this function:

void record_init(Record& r, int i) { r.Id = i; strcpy(r.k1, "0"); strcpy(r.k2, "0"); strcpy(r.k3, "0"); strcpy(r.k4, "0"); strcpy(r.k5, "0"); strcpy(r.k6, "0"); memset(r.mem, '-', bigBlock); }

The tests are specific to the scenario I care about: performing functional-like operations on a Range:

struct to_int { typedef int result_type; int operator() (const Record& r) const { return r.Id; }; }; function<bool (const Record&)> filter_f = [](const Record& r) {return r.Id < filterNo;}; template <class Range> int accumulate_filter(const Range& r) { return boost::accumulate( r | filtered(filter_f) | transformed(to_int()), 0, plus<int>()); }

The usage of a function and a functor is a bit odd. I don’t recall why I did it that way, but it doesn’t matter as it is the same for each test. What the test does is just filtering a bunch of record, transforming them (map) to ints and sum these ints.

How many repetitions of each test are used, how big is the record, how many records the Range contains is specified in these constants:

const int repetitions = 1000; const int bigBlock = 1000; const int howMany = 1000;

Time is kept using this clock that wraps boost::chrono:

typedef boost::chrono::process_cpu_clock the_clock; struct timer { timer(): clock_(the_clock::now()) {} the_clock::times elapsed() { return (the_clock::now() - clock_).count(); } the_clock::time_point clock_; };

I have tested the following configurations. Adding records by value:

int normal() { vector<Record> v; for (int i = 0; i < howMany; ++i) { Record r; record_init(r, i); v.push_back(r); } return accumulate_filter(v); }

I then tested adding records using a pod_vector. This is a data structure described here and in “Imperfect C++”. It is a vector that uses as an auto_buffer as the underlying storage. An auto_buffer is a class that uses stack memory if it needs more than a certain number of bytes specified at compile time, otherwise it uses heap memory. It works well if you know at compile time that most allocations for something take at most N bytes, but some might need more. This situation is surprisingly frequent. Unfortunately, your objects need to be POD to use the pod_vector.

I tested it in the case where the stack space is big enough (podVector<howMany*2>) and when it is not (podVector<howMany/10>).

int podVector() { pod_vector<Record, size> v; for (int i = 0; i < howMany; ++i) { Record r; record_init(r, i); v.push_back(r); } return accumulate_filter(v); }

I then tested just allocating the memory, without freeing it and using boost::pool in it’s ‘local’ form, which means that memory is freed when it goes out of scope (stack-like). The main drawback of the latter is that you cannot return the container/range.

int boostallocator(WhichOne which) { boost::pool<> p(sizeof(Record)); vector<Record*> v; Record* r; for (int i = 0; i < howMany; ++i) { if(which == Boost) r = (Record*)p.malloc(); // memory freed at function exit else r = new Record; // oops, memory leak record_init(*r, i); v.push_back(r); } return accumulate_filter(v | indirected); }

Indirected is needed because we are not talking about pointers. I also tested various variations of shared pointers. First a normal shared_ptr, then a shared_ptr initialized with the boost::singleton_pool and finally a pointer initialized with make_shared.

int pointers(WhichOne which) { vector<shared_ptr<Record>> v; for (int i = 0; i < howMany; ++i) { shared_ptr<Record> r; if(which == Normal) r = shared_ptr<Record>(new Record); else if(which == Boost) r = shared_ptr<Record>((Record*)record_pool::malloc(), [](void* p) {record_pool::free(p);}); else if(which == Make) r = make_shared<Record>(); else throw "This kind of pointer doesn't exist"; record_init(*r, i); v.push_back(r); } return accumulate_filter(v | indirected); }

Finally, I used a Loki smart pointer. This is a very elegantly designed smart pointers from “Modern C++ design”. You pass as template parameters the policies you want your smart pointer to have (aka how it should behave). I tested it like so:

typedef Loki::SmartPtr<Record, Loki::RefCounted, Loki::DisallowConversion, Loki::AssertCheck, Loki::DefaultSPStorage, LOKI_DEFAULT_CONSTNESS> RecordPtr;

And using the following, slightly more convoluted code:

int lokipointers(WhichOne) { vector<RecordPtr> v; for (int i = 0; i < howMany; ++i) { RecordPtr r = RecordPtr(new Record()); record_init(*r, i); v.push_back(r); } auto ret = accumulate_filter(v | transformed(RecordPtr::GetPointer) | indirected); return ret; }

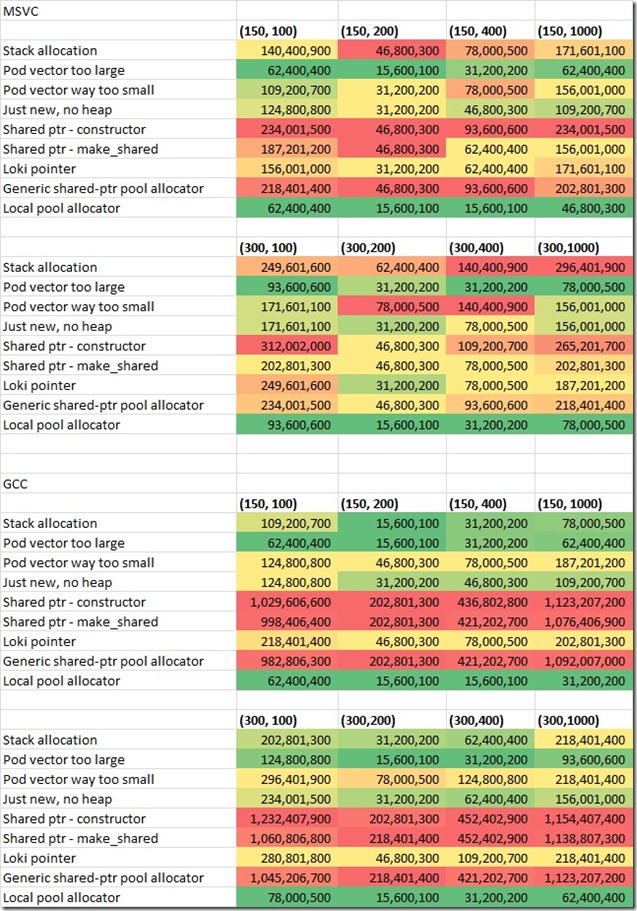

The result of my tests are in this table and at this link. I used VS10 and gcc 4.6.2 on a Windows 7 box. The first number in the (S, N) pair at the top of each column represents the size of the record, the second one represents the number of objects in the vector. To obtain reasonable numbers, the tests have been run with a different number of iteration for each pair, which means that you can compare the results vertically, but not horizontally.

There are a lot of interesting things in this table. Here are my takeaways. They are obviously specific to this single scenario. You might have different scenarios or different takeaways:

- Stack allocation is not too bad for small objects, especially for gcc

- Pod_vector is a good overall performer (even if you don’t get the size right), it is unfortunate that just works with POD types

- GCC seems to be lagging on the shared_ptr front. Also msvc implementation of the make_shared optimization gives a visible advantage

- There is not much advantage in using the shared pointer with the boost pooled allocator

- If you can use the boost local pool allocator, you should. It is faster than stack allocation (in this scenario). Remember that the memory is reclaimed when you exit the function …

So here you have it. A small detour on the performance of different schemes for allocating pod types. As it is often the case in C++, it depends …

BTW: Andy Sawyer told me about his rough algorithm to decide which STL container to use. I reproduce it here:

BEGIN

A decision tree for selecting a sequence container:

-

I’m in a rush and don’t want to read the rest: use std::deque

-

Do we know ahead of time exactly how many elements will be needed (and will they all fit on our stack!) - If so, use std::array.

-

Do we need constant-time random access? (Note that we often think we do, but actually don’t - YAGNI) If so, then we can eliminate std::list/std::forward_list as candidates.

-

Do we need bidirectional iteration? (Again, we need this less often that we think we do). If so, that eliminates std::forward_list

-

Will there be a large number of in-the-middle insertion/removal? (and assuming we haven’t already eliminated them) then std::list/std::forward_list (especially when the contained type is expensive to move/copy). In extreme cases, this may be a strong enough requirement to override other constraints (e.g. the need for random access). On the other hand, it may be viable to reduce the cost of move/copy of large contained types by using containers of (smart) pointers.

-

Do we need the contents as a contiguous array? use std::vector (and call reserve if we can) - sometimes, anyway; I’ve seen cases where it’s faster to build my collection as a std::deque, then transform to std::vector later on.)

-

Use std::deque.

Or, to put it another way, “use std::deque unless there’s a good reason not to”. That’s probably an overly-strong statement, but it’s a reasonable starting point.

Naturally, every case will be different - and no set of ‘rules of thumb’ is going to work every time (this is one of the things which distinguish rules of thumb from laws of physics). Commonly, we don’t know ahead of time which of those criteria is going to have the most significant impact on what we’re doing; when I find myself in that situation, I’ll use std::deque; once I have a firmer idea of the criteria, I’ll go back and revisit that choice (which — quite often — is as simple as changing a typedef). And of course - as with all ‘optimisations’ - measure, measure and measure again!

END

Next time, we’ll go back to more traditional functional programming topics.

Tags

- C++

- FUNCTIONAL